Mapping the Interaction Between Groundwater and the Surface Water Body of White Bear Lake, Minnesota

Melissa Hackenmueller

White Bear Lake saw record lows in 2012 and 2013, which led concerned community members to sue the Minnesota Department of Natural Resources on the claim that it had poorly managed groundwater withdrawals in the region, thereby causing the significant decline in the lake level. This led me to ask: could the groundwater levels be mapped throughout this region using ArcGIS, and would these water table maps show the same pattern as the surface water? The goals of my project were to create temporal water table maps of the region, compare these maps to each other, and then compare them to the water levels of White Bear Lake.

White Bear Lake is located in Ramsey and Washington counties in east-central Minnesota. My study area encompasses a 12 km radius around the lake (Figure 1). The lake is underlain by a glacial sediment aquifer and then a series of bedrock sandstones (Figure 2). White Bear Lake is also a city of about 24,000 people located northeast of Minneapolis, Minnesota (U.S. Census, 2010).

Figure 1. The yellow circle in the east-central portion of Minnesota is the extent of my study area.

Figure 2. A cross-section showing the series of unconfined aquifers that lie beneath White Bear Lake.

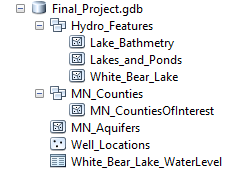

The data for this

project all came from two branches of the Minnesota state government. I found

the Minnesota counties shapefile, the roadways shapefile, and the surface water

features shapefile from the Geospatial Information Office. I found the White

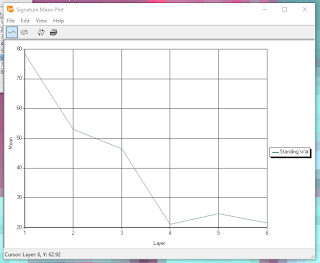

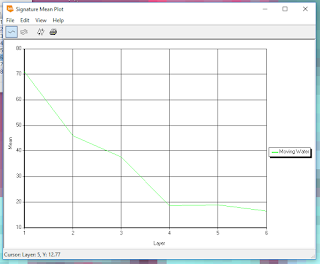

Bear Lake Water Levels (Figure 3) and the monitoring well data from the

Department of Natural Resources.

Figure 3. This graph shows the water level variations in White Bear Lake since the late 1960s.

Methods

The first step in this project was to use the Select By Attributes tool to narrow my data sets down to my study area. Next, I used the Proximity > Buffer tool to create a 12 km buffer around White Bear Lake to map the extent of my study area (Figure 4). The last pre-processing step was to digitize the monitoring wells within the study area. Since my goal was to look at temporal change in the water table, I digitized the wells for 1978, 1986, 1998, 2007, and 2017. Another goal was to determine whether the groundwater followed the same pattern of highs and lows as the surface water, so I chose two major highs and two major lows in the lake record and digitized wells for those years too. As seen in Figure 3, White Bear Lake had a major high in 1986 followed by a major low in 1991, and a major high in 2003 followed by a major low in 2013. These are the years that I chose to digitize wells for. Because 1986 appears in both sets, this gives eight distinct years of well data.

Figure 4. This map shows the 12 km buffer that I created around White Bear Lake to show the extent of my groundwater study area.
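
The study-area selection described above can be approximated outside ArcGIS. The following is a minimal sketch, not the Buffer tool itself: it assumes the lake is reduced to a single centre point (the real buffer was drawn around the lake), and the centre coordinates, well records, and function names are all hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two lat/lon points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def wells_within_radius(wells, center, radius_km=12.0):
    """Keep only wells whose distance from `center` is at most `radius_km`."""
    lat0, lon0 = center
    return [w for w in wells
            if haversine_km(lat0, lon0, w["lat"], w["lon"]) <= radius_km]

# Hypothetical inputs: an approximate lake centre and made-up well records.
LAKE_CENTER = (45.08, -92.98)
wells = [
    {"id": "A", "lat": 45.10, "lon": -93.00},  # a few km from the centre
    {"id": "B", "lat": 45.30, "lon": -92.98},  # well outside 12 km
]

selected = wells_within_radius(wells, LAKE_CENTER)
print([w["id"] for w in selected])
```

A point-based radius slightly underestimates a buffer drawn around the lake shoreline, so wells near the edge of the study area could be classified differently.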

The most important step of this project was to interpolate between the digitized well locations to create a water table map for the entire study area. I used the inverse distance weighted (IDW) tool to perform this interpolation. I ran IDW eight separate times to produce water table maps through time and for the highs and lows identified above. Before analyzing the interpolated maps, I made sure that all of them used the same range of water table levels so that they could be compared easily and accurately.
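
To illustrate what IDW interpolation does, here is a minimal sketch in plain Python. It is not the ArcGIS tool: it uses every well for every estimate rather than a search neighbourhood, the power of 2 is an assumed setting (the settings used in the project are not stated), and the well coordinates and elevations are invented for illustration.

```python
import math

def idw(x, y, samples, power=2.0):
    """Estimate a value at (x, y) from (xi, yi, zi) samples.

    Each sample is weighted by 1 / distance**power, so nearby wells
    dominate the estimate.
    """
    num = 0.0
    den = 0.0
    for xi, yi, zi in samples:
        d = math.hypot(x - xi, y - yi)
        if d == 0.0:
            return zi  # exactly on a well: return its measured level
        w = 1.0 / d ** power
        num += w * zi
        den += w
    return num / den

def idw_grid(samples, xs, ys, power=2.0):
    """Interpolate onto a regular grid; one row of values per y coordinate."""
    return [[idw(x, y, samples, power) for x in xs] for y in ys]

# Hypothetical wells: (easting_km, northing_km, water_table_elevation_ft)
wells_1986 = [(0.0, 0.0, 920.0), (10.0, 0.0, 930.0), (0.0, 10.0, 925.0)]
xs = [0.0, 5.0, 10.0]
ys = [0.0, 5.0, 10.0]

surface = idw_grid(wells_1986, xs, ys)
for row in surface:
    print([round(v, 1) for v in row])
```

Because each estimate is a weighted average, IDW never produces values above the highest or below the lowest measured level, which is one reason sparse wells can yield a smooth surface.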

Results and Analysis

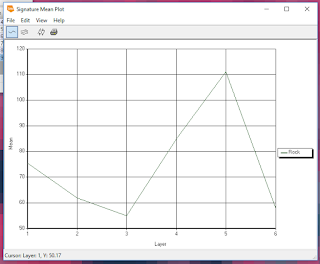

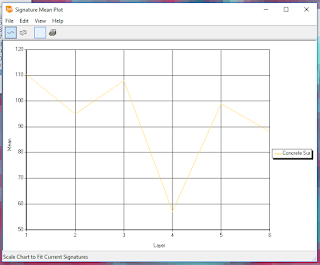

Figure 5 shows all of the interpolated water table maps. Overall, the water table maps did not change dramatically over the roughly 40 years mapped (1978 to 2017). The first thing I noticed during analysis was that the water table became lower toward the SW. In 1986 and 1998 the water table also became lower toward the NE. The highest water table levels were consistently to the north, and the center of my study area always had roughly average water table levels for the region. The interpolation becomes more complex in later years, probably because of the higher concentration of wells. In 2007 there is a very low spot at the NW corner of White Bear Lake. I believe this may be an outlier or a misreading of some sort, as it doesn’t fit the other well readings in that area.
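
The suspicion that one 2007 reading is an outlier could be checked numerically rather than by eye. The sketch below is one possible approach and not a step that was performed in this project: it flags a well when its level is far from the median of nearby wells. The search radius, threshold, minimum spread, and all well values are assumptions chosen for illustration.

```python
import math
import statistics

def flag_outliers(wells, radius_km=5.0, k=3.0, min_spread_ft=1.0):
    """Return ids of wells whose level differs strongly from nearby wells.

    wells: list of (id, x_km, y_km, level_ft).
    A well is flagged when |level - neighbour median| exceeds k times the
    neighbours' median absolute deviation (floored at min_spread_ft).
    """
    flagged = []
    for i, (wid, x, y, z) in enumerate(wells):
        neighbours = [
            zj for j, (_, xj, yj, zj) in enumerate(wells)
            if j != i and math.hypot(x - xj, y - yj) <= radius_km
        ]
        if len(neighbours) < 3:
            continue  # too few neighbours to judge this well
        med = statistics.median(neighbours)
        mad = statistics.median([abs(zj - med) for zj in neighbours])
        spread = max(mad, min_spread_ft)
        if abs(z - med) > k * spread:
            flagged.append(wid)
    return flagged

# Hypothetical readings: W5 sits far below the wells around it.
wells_2007 = [
    ("W1", 0.0, 0.0, 924.0),
    ("W2", 1.0, 0.0, 925.0),
    ("W3", 0.0, 1.0, 926.0),
    ("W4", 1.0, 1.0, 925.0),
    ("W5", 0.5, 0.5, 890.0),
]

print(flag_outliers(wells_2007))
```

A flagged well is only a candidate for review; a real local drawdown near a pumping well would look the same to this check.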

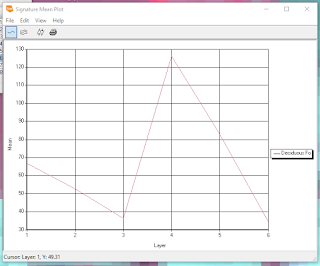

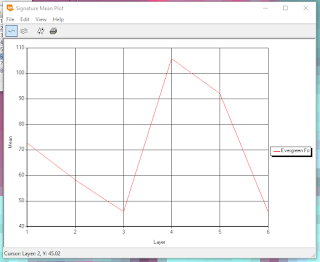

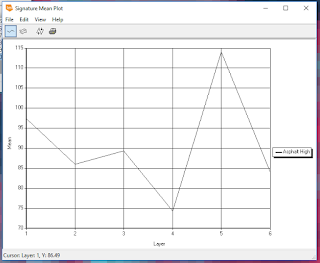

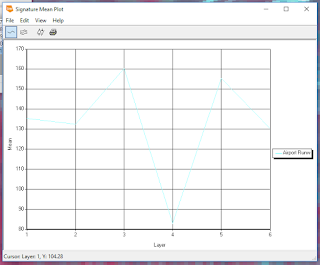

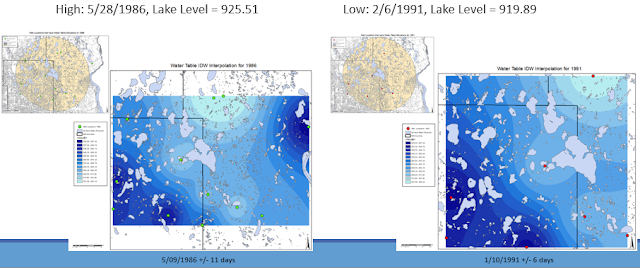

I then looked at the water table maps for the recorded highs and lows of White Bear Lake (Figure 6 and Figure 7). When comparing the high of 1986 and the low of 1991, nothing stands out that corresponds to the 6-foot drop in surface water. There are slightly higher peaks in 1986, but nothing dramatic enough to reflect the significant change in surface water. I then looked at the 2003 high and the 2013 low to see whether they showed more of a difference. To my surprise, these water table maps looked even more similar. There is a low in the NW portion of White Bear Lake in the 2013 water table map, but this low also appears in the 2003 map. The dramatic surface water changes did not seem to be represented in the water table maps that I created.

Figure 6. These maps compare a time of high and low surface water levels with the corresponding groundwater levels. The left is the high period of 1986 and the right is the low period of 1991.
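
The comparison above was made visually. One way to make it quantitative, offered here only as a sketch, is to subtract the low-year grid from the high-year grid cell by cell and summarize the differences, which can then be set against the roughly 6-foot change in lake level. The grids below are invented values, not results from this project, and the function names are hypothetical.

```python
def grid_difference(high, low):
    """Cell-by-cell difference (high minus low) of two equally sized grids."""
    if len(high) != len(low) or any(len(a) != len(b) for a, b in zip(high, low)):
        raise ValueError("grids must have the same shape")
    return [[a - b for a, b in zip(row_h, row_l)]
            for row_h, row_l in zip(high, low)]

def summarize(diff):
    """Mean, minimum, and maximum of all cells in a difference grid."""
    cells = [v for row in diff for v in row]
    return {"mean": sum(cells) / len(cells), "min": min(cells), "max": max(cells)}

# Hypothetical interpolated water table elevations (ft) on a 2 x 2 grid.
wt_high_year = [[925.0, 926.0], [924.0, 923.0]]
wt_low_year = [[924.0, 925.5], [924.0, 922.0]]

diff = grid_difference(wt_high_year, wt_low_year)
stats = summarize(diff)
print(stats)
```

This only works if both grids share the same cell size and extent, which is the case when every IDW run uses the same study-area boundary and output resolution.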

Conclusions

Overall, the water table maps that I created using IDW interpolation did not accurately represent the real water table. I came to this conclusion because the groundwater varied so little relative to the large variations in the surface water levels. Groundwater and surface water are closely related to one another; therefore, a much closer relationship is expected. Future work would include a denser network of wells in my study area to create a more accurate water table map. I could also quantify the groundwater pumping in the area to determine the cone of depression that is interacting with White Bear Lake. Incorporating precipitation would also allow for a much more comprehensive evaluation of the causes of decline in White Bear Lake.

Data Sources

Minnesota Department of Natural Resources. GIS Shapefiles. State of Minnesota.

Minnesota Geospatial Information Office. GIS Shapefiles. State of Minnesota.

U.S. Census, 2010.

Literature Review References

Kalivas, D. P., Kollias, V. J., and Karantounias, G., 2003, A GIS for the assessment of the spatio-temporal changes of the Kotychi Lagoon, western Peloponnese, Greece: Water Resources Management, v. 17, no. 1, p. 19-36.

Moeckel, J., and Ekman, J., 2016, Findings of Fact and Order: White Bear Lake Protective Elevation, White Bear Lake, Ramsey and Washington Counties: Minnesota Department of Natural Resources.

Neto, R. T. D., Santos, C. A. G., Mulligan, K., and Barbato, L., 2016, Spatial and temporal water-level variations in the Texas portion of the Ogallala Aquifer: Natural Hazards, v. 80, no. 1, p. 351-365.